ClassifyGxT: classifying gene-by-treatment interactions

Yuriko Harigaya, Michael Love, William Valdar

2025-09-19

Source:vignettes/classifygxt.Rmd

classifygxt.RmdIntroduction

The ClassifyGxT software is an implementation of a Bayesian model selection (BMS) framework for classifying GxT interactions. The method was developed primarily for molecular count phenotypes, such as RNA-seq and ATAC-seq data, although it can be used for other types of phenotypes.

- The input is a list of feature-SNP pairs for which significant GxT interactions have been identified by the standard response molecular QTL mapping procedure (see Alasoo et al. (2018) and Matoba et al. (2024) for example). For each of the feature-SNP pairs, individual genotype and phenotype data are required.

- The main output is the posterior probability for different GxT types. See Overview on the main page for the model categories representing the types of GxT interactions.

- The package provides functions to process a single feature-SNP pair. In practice, we recommend running parallel jobs on the computer cluster with appropriate grouping.

Quick start

We first show the minimal steps to run ClassifyGxT. We assume that

data is a list object, as described in Input

data. See Performing

BMS for setting hyperparameter values.

Input data

For each feature-SNP pair, a list object containing the following elements needs to be generated:

-

y: A vector of length containing preprocessed molecular count phenotypes, where is the number of samples. See Data preprocessing below for preprocessing. -

g: A vector of length containing genotypes coded as to represent the number of minor (alternative) alleles or the imputation-based allelic dosage in . -

t: A vector of indicator variables for the treatment. -

subject: An optional character or numeric vector corresponding to the subjects. This is necessary when the model includes donor or polygenic (kinship) random effects. -

feat.id: An optional character string representing the feature. -

snp.id: An optional character string representing the SNP.

Note that the samples must be in the same order in the

y, g, t, and subject

elements and that the list can contain additional elements.

Data preprocessing

For molecular count phenotypes, the raw count data from sequencing experiments can be processed as follows:

- Step 1: make a matrix of feature counts with rows and columns representing features and samples, respectively

- Step 2: scale the count data by the library size according to Palowitch et al. (2018)

- Step 3: optionally filter features based on the scaled counts according to Matoba et al. (2024)

- Step 4: transform using the function

- Step 5: regress out the treatment indicator (and optionally other fixed-effect covariates, such as sex and age of the subjects)

- Step 6: perform principal component analysis (PCA)

- Step 7: regress out an appropriate number of PCs (and the same set of fixed-effect covariates as in step 5) from the original, transformed data (from step 4)

Note that ClassifyGxT currently does not accommodate covariates other than the donor or polygenic random effects. Other covariates need to be regressed out prior to performing BMS (step 7). See Including covariates for details. For determining the number of PCs, see Matoba et al. (2024).

Generating data

In this section, we simulate data using make_data(). The

simulated data will have the same format as discussed in the previous

section and will be used for demonstrating how to run BMS in the next

sections. For simplicity, we omit random effects. See the function

documentation (?make_data) for how to include random

effects.

We first specify the number of feature-SNP pairs for each of the eight models.

(model.name <- get_model_names())

#> [1] "0,0,0" "1,0,0" "0,1,0" "1,1,0" "0,0,1" "1,0,1" "0,1,1" "1,1,1"For this demonstration, we only make data for 10 pairs for each model.

num <- rep(10, 8)

names(num) <- model.name

num

#> 0,0,0 1,0,0 0,1,0 1,1,0 0,0,1 1,0,1 0,1,1 1,1,1

#> 10 10 10 10 10 10 10 10With this specification, the output will be a list of 80 lists. Each

of the lists corresponds to each feature-SNP pair. The first ten lists

are based on "0,0,0", the next ten are based on

"1,0,0", and so forth.

The genotype, treatment, and interaction effects will be drawn from Normal distributions with a zero mean and user-specified standard deviations. These values represent “typical” magnitudes of the effects. We specify standard deviations of the effects as follows.

sd.g <- 1.5 # genotype

sd.t <- 2.0 # treatment

sd.gxt <- 1.0 # interaction

sd <- c(sd.g, sd.t, sd.gxt)Note that the residual error standard deviation , which represents a typical magnitude of noise, is set to 1 by default.

The following code generates a data frame specifying the mapping between samples, subjects, and treatment conditions.

n.sample <- 160 # number of samples

n.sub <- 80 # number of subjects

anno <- data.frame(

sample=seq_len(n.sample),

subject=rep(seq_len(n.sub), each=2),

condition=rep(c(0, 1), times=n.sub))

head(anno)

#> sample subject condition

#> 1 1 1 0

#> 2 2 1 1

#> 3 3 2 0

#> 4 4 2 1

#> 5 5 3 0

#> 6 6 3 1Now we can generate fake data using make_data().

data.list <- make_data(

anno=anno, fn="nonlinear",

num=num, sd=sd)Performing BMS

In this section, we perform BMS using do_bms(). For

simplicity, we do not model random effects. See Including

random effects below for how to include random effects.

We first specify the “model prior.” Note that this is an optional argument and that, by default, a uniform prior is used. This specification reflects a prior belief that all models are equally likely. Here, we explicitly specify the default model prior for demonstration purposes.

p.m <- rep(1/8, 8)We also specify the “effect prior” by choosing the values of the , , and hyperparameters, which correspond to the genotype, treatment, and GxT interaction effects, respectively. The hyperparameters represent our prior beliefs about the effects relative to the residual error standard deviation (noise). Specifically, we place priors, In practice, we recommend optimizing the values via an empirical Bayes approach (see Optimizing the effect prior hyperparameters below). Here, we set them to the same values as the effect standard deviations in the data-generating models. This is a reasonable choice since we set the residual error standard deviation to 1 when generating the data.

phi.g <- 1.5 # genotype

phi.t <- 2.0 # treatment

phi.gxt <- 1.0 # interaction

phi <- c(phi.g, phi.t, phi.gxt)The following code performs BMS for the 71st data using

do_bms() with nonlinear regression

(fn="nonlinear"). Recall that the 71-80th data were

generated based on the eighth model, "1,1,1" (see Generating

data above). The method input argument must be set to

either "mcmc.bs", which represents Markov Chain Monte Carlo

(MCMC) followed by bridge sampling, or "map.lap", which

represents MAP estimation followed by Laplace approximation. Although

the latter method can be orders of magnitude faster, we recommend using

"mcmc.bs" for the final results, if possible, since it is

not straightforward to obtain error bounds for Laplace

approximation.

k <- 71

data <- data.list[[k]]

res <- do_bms(

data=data, p.m=p.m, phi=phi,

fn="nonlinear", method="map.lap")This returns a list object containing the following elements.

names(res)

#> [1] "fn" "ranef" "rint" "p.m"

#> [5] "seed" "ln.p.y.given.m" "ln.p.y" "p.m.given.y"

#> [9] "optim.list"-

fn- A character string specifying the function. -

ranef- A logical. -

rint- A logical as to whether the phenotypes have been RINT-transformed. -

p.m- A vector of hyperparameters of the model prior. -

seed- A integer specifying a seed fo RNG. -

ln.p.y.given.m- A named vector of the log marginal likelihood given each model. If method is set to"map.lap", the value is shifted by a constant. -

ln.p.y- A scalar value of the log marginal likelihood. If method is set to"map.lap", the value is shifted by a constant. -

p.m.given.y- A named vector of posterior probability of the models. -

optim.list- A list of outputs from the optim function from the stat package containing MAP estimates and Hessian. This element is included only when method is set to"map.lap".

See the function documentation (?do_bms) for more

details.

For multiple feature-SNP pairs, we recommend processing the data in batches using a workflow such as Snakemake. Within a batch, BMS can be performed in serial or parallel processes. For a moderate number of feature-SNP pairs, the following code can be used.

res.list <- lapply(

X=data.list, FUN=do_bms, p.m=p.m, phi=phi,

fn="nonlinear", method="map.lap")Extracting the posterior probability of the models

We can extract the posterior probability of the model as follows.

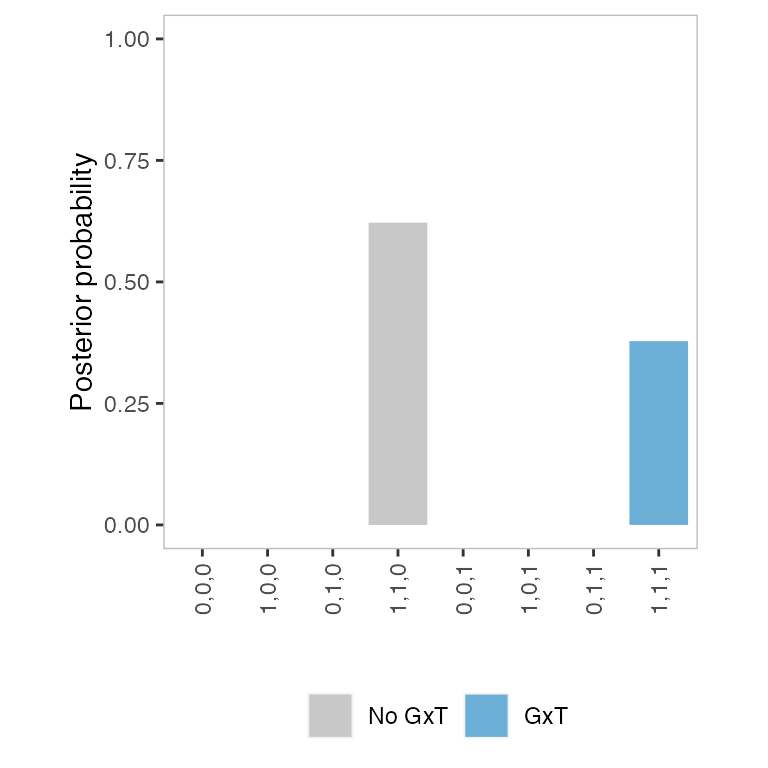

(pp <- get_pp(res))

#> 0,0,0 1,0,0 0,1,0 1,1,0 0,0,1 1,0,1

#> 2.381904e-31 3.102070e-13 1.859044e-25 6.219652e-01 6.612267e-19 5.423350e-12

#> 0,1,1 1,1,1

#> 3.940914e-14 3.780348e-01Note that, in this particular example, the highest posterior

probability is assigned to the fourth model "1,1,0", even

though the data-generating model is “1,1,1”. However, we

will see that BMS tends to choose the correct models across multiple

feature-SNP pairs (Heatmap

of the posterior probability of the models below).

The following code can be used to extract the posterior probability from a list object storing results for multiple feature-SNP pairs.

pp.mat <- sapply(X=res.list, FUN=get_pp)In this case, the output is a matrix that contains rows and columns corresponding to the models and the feature-SNP pairs, respectively.

Including covariates

Including fixed-effect covariates

In molecular QTL analyses on experimental data, it is important to control for confounding factors, such as molecular phenotype PCs and PEER factors to avoid spurious associations. We recommend identifying PCs according to the procedure described in Data preprocessing (steps 1 - 6). For computational reasons, ClassifyGxT does not currently accommodate covariates other than the donor or polygenic random effects. The fixed-effect covariates need to be regressed out prior to performing BMS as in Matoba et al. (2024). See step 7 in Data preprocessing. Note that we do not typically consider fixed-effect covariates in simulation experiments.

Including random effects

In analyses of experimental data, it is ofen desirable to include

random effects in the model. To include donor random effects, we first

need to run get_tu_lambda().

tu.lambda <- get_tu_lambda(data)We then use the output as input when running

do_bms().

res.ranef <- do_bms(

data=data, p.m=p.m, phi=phi,

fn="nonlinear", method="map.lap",

tu.lambda=tu.lambda)We can also include polygenic random effects rather than donor random

effects to accout for the genetic relatedness and popuplation structure.

See the function documentation (?get_tu_lambda) for

details.

Visualizing the results

Generating a genotype-phenotype (GP) plot

We recommend visually inspecting the model fit by generating scatter

plots, which we call a “GP” plot, for each feature-SNP pair (or SNP). To

make a GP plot, we first create a list of data frames using

format_gp().

gp.plot <- format_gp(data=data, fit=res)We then create a ggplot2 object using

make_gp_plot(). The appearance of the plot can be modified

as usual.

p1 <- make_gp_plot(gp=gp.plot)

p1

Generating a posterior probability (PP) plot

It is also useful to generate a barplot of posterior probability,

which we call a “PP” plot. To make a PP plot, we first create a data

frame using format_pp().

pp.plot <- format_pp(fit=res)We then create a ggplot2 object using

make_pp_plot(). The appearance of the plot can be modified

as usual.

p2 <- make_pp_plot(pp=pp.plot)

p2

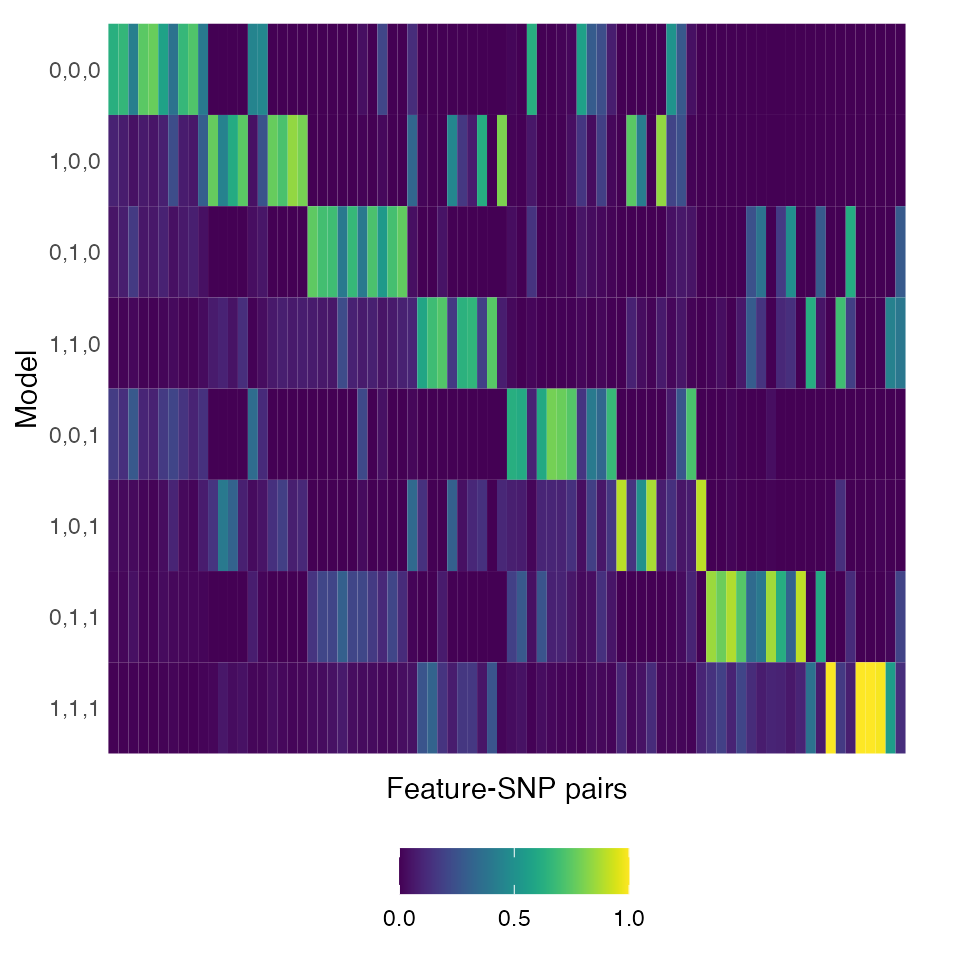

Heatmap of the posterior probability of the models

We can generate a heatmap to visualize posterior probability across

multiple feature-SNP pairs using make_heatmap(). Note that

we transpose the matrix using t() in the following

code.

p3 <- make_heatmap(t(pp.mat))

p3 + theme(legend.position="bottom",

legend.title=element_blank())

As expected, we see that the highest probability tends to be assigned

to the correct (i.e., data-generating) model. That is, the MAP model

tends to be "0,0,0" for the first ten feature-SNP pairs in

the left-most columns, "1,0,0" for the 11-20th pairs, and

so forth.

Optimizing the effect prior hyperparameters

We recommend optimizing the effect prior hyperparmeters by an

empirical Bayes approach. In this approach, we obtain the

,

,

and

hyperparameter values that maximize the sum of

-transformed

marginal likelihood across all feature-SNP pairs that are being

analyzed. The most conceptually straightforward method is to perform a

grid search (see our

manuscript for details). We recommend using a workflow such as Snakemake. For

faster computation, we use MAP estimation and Laplace approximation,

setting method="map.lap" (see Performing

BMS above). From the BMS result for a feature-SNP pair, the

of the marginal likelihood can be extracted as follows.

(ln.p.y <- res$ln.p.y)

#> [1] -257.3321Since error bounds for Laplace approximation are not easily obtained, we recommend rerunning MCMC and bridge sampling with optimal hyperparameter values for the final result.

Session information

sessionInfo()

#> R version 4.4.3 (2025-02-28)

#> Platform: aarch64-apple-darwin20

#> Running under: macOS Sequoia 15.6.1

#>

#> Matrix products: default

#> BLAS: /Library/Frameworks/R.framework/Versions/4.4-arm64/Resources/lib/libRblas.0.dylib

#> LAPACK: /Library/Frameworks/R.framework/Versions/4.4-arm64/Resources/lib/libRlapack.dylib; LAPACK version 3.12.0

#>

#> locale:

#> [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

#>

#> time zone: America/New_York

#> tzcode source: internal

#>

#> attached base packages:

#> [1] stats graphics grDevices utils datasets methods base

#>

#> other attached packages:

#> [1] classifygxt_0.1.1 ggplot2_3.5.1

#>

#> loaded via a namespace (and not attached):

#> [1] gtable_0.3.6 xfun_0.51 bslib_0.9.0

#> [4] QuickJSR_1.6.0 htmlwidgets_1.6.4 inline_0.3.21

#> [7] lattice_0.22-6 vctrs_0.6.5 tools_4.4.3

#> [10] generics_0.1.3 stats4_4.4.3 parallel_4.4.3

#> [13] tibble_3.2.1 pkgconfig_2.0.3 Matrix_1.7-2

#> [16] desc_1.4.3 RcppParallel_5.1.10 lifecycle_1.0.4

#> [19] farver_2.1.2 compiler_4.4.3 stringr_1.5.1

#> [22] hrbrthemes_0.8.7 textshaping_1.0.0 Brobdingnag_1.2-9

#> [25] munsell_0.5.1 codetools_0.2-20 fontquiver_0.2.1

#> [28] fontLiberation_0.1.0 htmltools_0.5.8.1 sass_0.4.9

#> [31] yaml_2.3.10 Rttf2pt1_1.3.12 pillar_1.10.1

#> [34] pkgdown_2.1.1 jquerylib_0.1.4 extrafontdb_1.0

#> [37] cachem_1.1.0 StanHeaders_2.32.10 bridgesampling_1.1-2

#> [40] viridis_0.6.5 fontBitstreamVera_0.1.1 rstan_2.32.7

#> [43] tidyselect_1.2.1 digest_0.6.37 mvtnorm_1.3-3

#> [46] stringi_1.8.4 dplyr_1.1.4 labeling_0.4.3

#> [49] extrafont_0.19 fastmap_1.2.0 grid_4.4.3

#> [52] colorspace_2.1-1 cli_3.6.4 magrittr_2.0.3

#> [55] loo_2.8.0 pkgbuild_1.4.6 withr_3.0.2

#> [58] gdtools_0.4.1 scales_1.3.0 rmarkdown_2.29

#> [61] matrixStats_1.5.0 gridExtra_2.3 ragg_1.3.3

#> [64] coda_0.19-4.1 evaluate_1.0.3 knitr_1.49

#> [67] viridisLite_0.4.2 rstantools_2.4.0 rlang_1.1.5

#> [70] Rcpp_1.0.14 glue_1.8.0 rstudioapi_0.17.1

#> [73] jsonlite_1.9.1 R6_2.6.1 systemfonts_1.2.1

#> [76] fs_1.6.5